Demo 2 & 3 - MCU Target, pipelines and structure

Estimated time: 5 minutes

Fill in the forms

Go to http://157.26.64.222/benchmark.

Form 1:

- Target: NXP Cup board, MCU LPC55S69JBD100

- Runtime: TensorFlow Lite

- Model: small_model.tflite

Form 2:

- Target: Jetson Orin Nano

- Runtime: TensorRT

- Model: big_model.onnx

Click the "Launch the benchmark" button.

Key talking points (Form 1):

- Target: This time the target is an MCU based on a Cortex-M33.

- Runtime: The runtime is TensorFlow Lite for Microcontrollers. This benchmark will take more time than the previous one.

- Model: The selected model is very small because this target has very limited memory resources.

Key talking points (Form 2):

- Target: The target is a Jetson Orin Nano, a powerful device with a GPU.

- Runtime: The runtime is TensorRT, a runtime optimized for NVIDIA GPUs.

- Model: The model is large by embedded standards, so it will take more time to run.

Wait for the results & show the pipeline and the target repository

The results should be available in about 3 minutes.

Go to the pipeline page and show the different steps of the benchmark.

Key talking points:

- Pipeline: The pipeline is the sequence of steps executed to run the benchmark.

- Runner: The VirtualLab selects the runner based on its runner tags.

- Steps:

  - Prepare: The VirtualLab prepares the environment. It downloads the model and the target sources to the runner.

  - Run: The VirtualLab runs the scripts that perform the benchmark.

  - Upload: The runner uploads the results to the VirtualLab.

  - End: The VirtualLab displays the results to the user.
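The runner selection and the four steps can be sketched as follows. This is a minimal illustration, not the VirtualLab implementation: it assumes a runner is eligible when its tags include every tag the benchmark requires (GitLab CI, for example, matches jobs to runners this way). The `Runner` and `Benchmark` classes, the tag names, and the log messages are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Runner:
    name: str
    tags: set[str]  # hypothetical tag names, e.g. {"jetson-orin-nano", "tensorrt"}


@dataclass
class Benchmark:
    target: str
    runtime: str
    model: str
    required_tags: set[str]
    log: list[str] = field(default_factory=list)


def select_runner(runners: list[Runner], required_tags: set[str]) -> Runner:
    """Return the first runner whose tags include every required tag (assumed rule)."""
    for runner in runners:
        if required_tags <= runner.tags:  # subset test: all required tags are present
            return runner
    raise LookupError(f"no runner has all tags {sorted(required_tags)}")


def run_pipeline(bench: Benchmark, runners: list[Runner]) -> Runner:
    """Walk through the four steps in order, recording each one in bench.log."""
    runner = select_runner(runners, bench.required_tags)
    # Prepare: download the model and the target sources to the runner
    bench.log.append(f"prepare: {bench.model} -> {runner.name}")
    # Run: execute the target repository scripts that perform the benchmark
    bench.log.append(f"run: {bench.runtime} on {bench.target}")
    # Upload: the runner sends the results back to the VirtualLab
    bench.log.append("upload: results -> VirtualLab")
    # End: the VirtualLab displays the results to the user
    bench.log.append("end: results displayed")
    return runner


if __name__ == "__main__":
    runners = [
        Runner("mcu-runner", {"lpc55s69", "tflite-micro"}),
        Runner("gpu-runner", {"jetson-orin-nano", "tensorrt"}),
    ]
    bench = Benchmark("Jetson Orin Nano", "TensorRT", "big_model.onnx",
                      {"jetson-orin-nano", "tensorrt"})
    print(run_pipeline(bench, runners).name)  # gpu-runner
    print("\n".join(bench.log))
```

Matching on a tag subset, rather than on a runner name, lets several identical boards share the same tags so any free one can pick up the job.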

Go to the target repositories page and show the different repositories. Select and show the JetsonOrinNano_TRT repository.

Here is the MCU repository for LPC55S69_TFLite.

Key talking points:

- Structure: The structure of the target repository matters: it contains all the scripts and files needed to run the benchmark.

- AI_Support: Prepares the environment and, if needed, generates the appropriate code for the target.

- AI_Build: Builds the target code.

- AI_Deploy: Deploys the model and the target code to the target.

- AI_Manager: Runs the benchmark and measures the performance.

- AI_Project: Contains the target project and code base.
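To make the expected layout concrete, here is a small sketch that checks a repository for the folders listed above and returns the stage folders in the order they would run. It assumes each part is a top-level folder with exactly that name and that the stages run Support, Build, Deploy, Manager; both are assumptions, and the helper names `missing_folders` and `stage_plan` are hypothetical.

```python
from __future__ import annotations

import tempfile
from pathlib import Path

# Folder names come from the talking points above; treating them as top-level
# folders and running them in this order are assumptions.
STAGES = ["AI_Support", "AI_Build", "AI_Deploy", "AI_Manager"]
REQUIRED = STAGES + ["AI_Project"]  # AI_Project holds the code base, it is not a stage


def missing_folders(repo: Path) -> list[str]:
    """Return the expected top-level folders that repo does not contain."""
    return [name for name in REQUIRED if not (repo / name).is_dir()]


def stage_plan(repo: Path) -> list[Path]:
    """Return the stage folders in execution order, or fail if any folder is missing."""
    missing = missing_folders(repo)
    if missing:
        raise FileNotFoundError(f"{repo.name} is missing: {', '.join(missing)}")
    return [repo / name for name in STAGES]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        repo = Path(tmp) / "JetsonOrinNano_TRT"  # mock repository built on the fly
        for name in REQUIRED:
            (repo / name).mkdir(parents=True)
        print([p.name for p in stage_plan(repo)])
        # ['AI_Support', 'AI_Build', 'AI_Deploy', 'AI_Manager']
```

Because every target repository shares this layout, the same pipeline can drive an MCU target and a GPU target; only the contents of the folders change.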

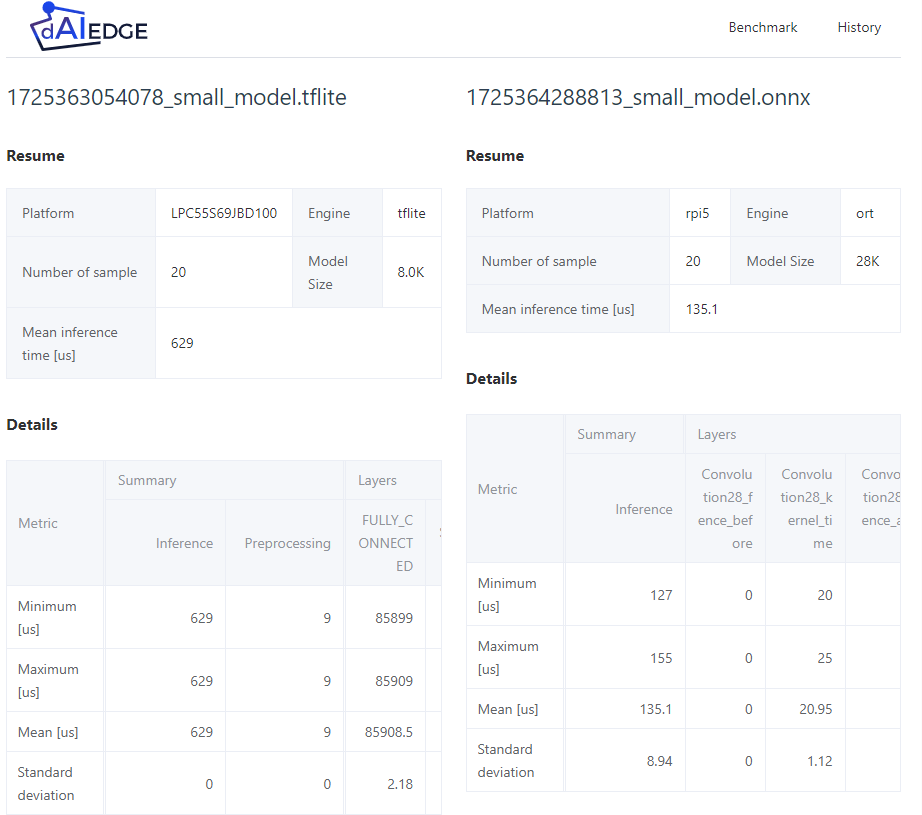

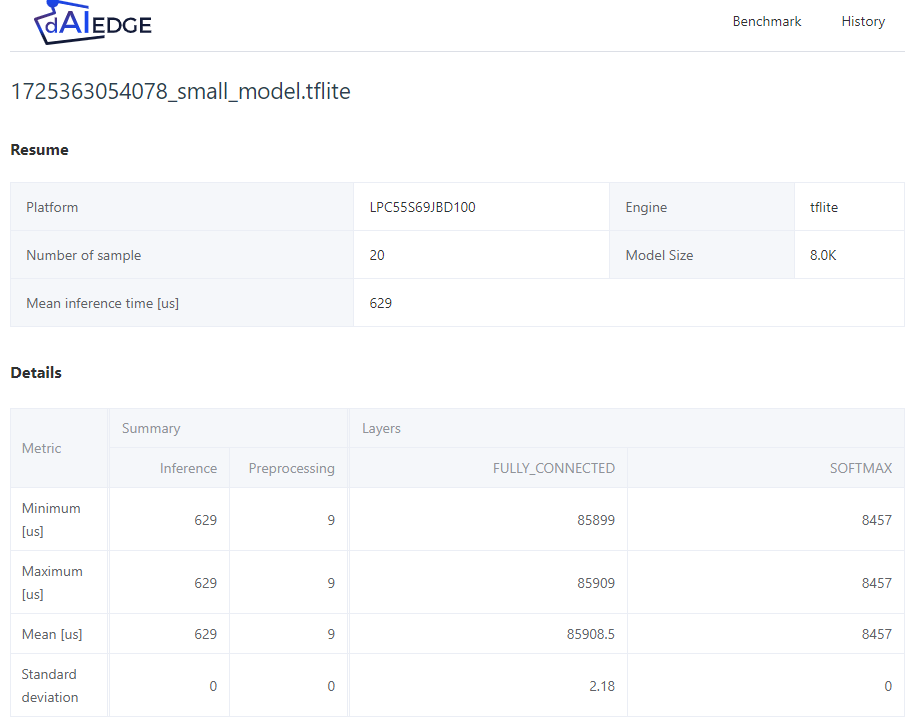

Analyze the results

Key talking points:

- Small Model: The model is very small, so the inference time is low.
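Inference time is usually obtained by timing repeated inference calls after a few untimed warm-up runs. The host-side sketch below illustrates that idea only; it is an assumption, not what AI_Manager actually does (on the LPC55S69 the timing would have to run on the MCU itself). The function name, warm-up count, and number of runs are hypothetical.

```python
from __future__ import annotations

import statistics
import time
from typing import Callable


def measure_latency_ms(infer: Callable[[], object], warmup: int = 5,
                       runs: int = 50) -> dict[str, float]:
    """Time repeated calls to infer() and summarize the latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    for _ in range(warmup):
        infer()  # warm-up calls (caches, lazy initialization) are not timed
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "mean_ms": statistics.fmean(samples),
        "median_ms": statistics.median(samples),
        "min_ms": min(samples),
        "max_ms": max(samples),
    }


if __name__ == "__main__":
    # Stand-in workload; a real benchmark would call the runtime's inference function.
    print(measure_latency_ms(lambda: sum(range(10_000))))
```

Reporting the median next to the mean helps when a few runs are slowed down by unrelated activity on the host.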

In case of problems with the LPC55S69JBD100:

- Here is the resulting JSON.

- Here is the pipeline of the same benchmark.

In case of problems with the Jetson Orin Nano:

- Here is the resulting JSON.

- Here is the pipeline of the same benchmark.

Compare the results

Go to the history page and compare the two results.

Key talking points:

- Models: The models and the targets are different, so comparing these results is not meaningful. The comparison is shown only to illustrate the feature.
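The caveat above can be expressed as a guard: before comparing two results, check whether the setups actually match. This is a minimal sketch; the `Result` fields are hypothetical because the real result JSON schema is not shown here, and reducing a result to a single mean latency is a simplification.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    # Hypothetical fields; the actual result JSON may be structured differently.
    target: str
    runtime: str
    model: str
    mean_latency_ms: float


def compare(first: Result, second: Result) -> str:
    """Report the latency ratio and flag any setup difference that invalidates it."""
    if first.mean_latency_ms <= 0:
        raise ValueError("first result must have a positive latency")
    ratio = second.mean_latency_ms / first.mean_latency_ms
    note = f"latency ratio second/first = {ratio:.2f}"
    # A ratio is only meaningful when target, runtime and model are identical
    differences = [name for name in ("target", "runtime", "model")
                   if getattr(first, name) != getattr(second, name)]
    if differences:
        return f"not comparable (different {', '.join(differences)}); {note}"
    return note
```

For the two benchmarks in this demo, all three fields differ, so the guard would label the comparison as not comparable while still showing the ratio.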