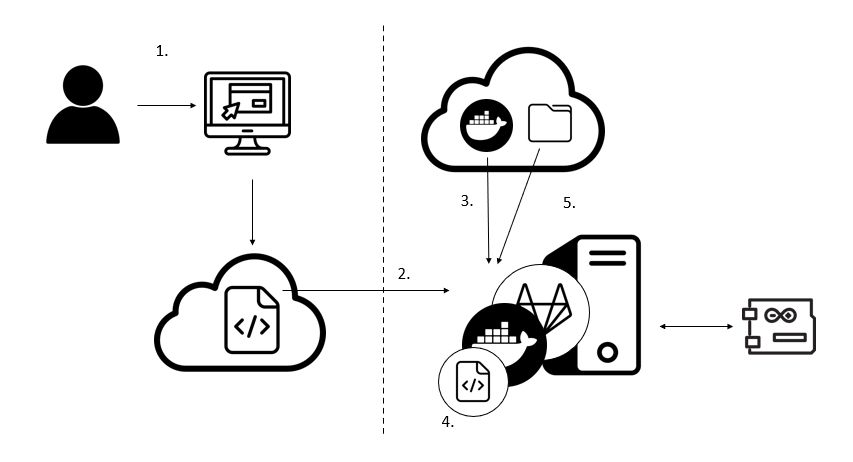

VLab Architecture

This section describes the VLab architecture, its components, and how they interact.

Web interface

At the top level of its architecture, the dAIEdge-VLab provides a benchmarking solution to end users through a web interface, which users rely on to run benchmarks. To run a benchmark, the user uploads a pre-trained model, selects a target, selects an inference engine available for that target, and launches the benchmark. The results are displayed once the benchmark is complete.
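A benchmark configuration is only valid if the chosen inference engine is available on the chosen target. As a minimal sketch, assuming a hypothetical catalog `TARGET_ENGINES` that maps target names to their supported engines (the names below are illustrative, not real VLab targets), the check performed before launching could look like this:

```python
from dataclasses import dataclass

# Hypothetical catalog: which inference engines each target supports.
# Target and engine names are illustrative, not the real VLab catalog.
TARGET_ENGINES: dict[str, set[str]] = {
    "example-board-a": {"tflite", "onnxruntime"},
    "example-mcu-b": {"tflite-micro"},
}


@dataclass(frozen=True)
class BenchmarkRequest:
    """The three choices a user makes before launching a benchmark."""
    model_path: str
    target: str
    engine: str

    def validate(self, catalog: dict[str, set[str]] = TARGET_ENGINES) -> None:
        """Raise ValueError if this configuration cannot be benchmarked."""
        if not self.model_path:
            raise ValueError("a pre-trained model must be uploaded")
        if self.target not in catalog:
            raise ValueError(f"unknown target: {self.target!r}")
        if self.engine not in catalog[self.target]:
            raise ValueError(
                f"engine {self.engine!r} is not available on {self.target!r}"
            )
```

For example, `BenchmarkRequest("model.tflite", "example-board-a", "tflite").validate()` passes, while asking for `onnxruntime` on `example-mcu-b` raises an error because that engine is not listed for the target.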

For a deeper understanding, we recommend familiarizing yourself with the web interface.

Target and host machine

At the lower level of the architecture, hardware boards, referred to as targets, are configured to enable model benchmarking. A target must be able to run inference on the uploaded model and collect performance metrics. This may require installing an inference engine such as TFLite or ONNX Runtime.
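Collecting a performance metric such as latency usually means timing repeated inferences on the target. The sketch below is generic and engine-agnostic: `infer` is a hypothetical callable wrapping one inference call of whatever engine is installed, and the warm-up and run counts are assumed defaults, not VLab settings.

```python
import statistics
import time
from typing import Callable


def measure_latency(
    infer: Callable[[object], object],
    sample: object,
    warmup: int = 5,
    runs: int = 50,
) -> dict[str, float]:
    """Time repeated calls to `infer` and summarize latency in milliseconds.

    `infer` is an assumption of this sketch: it stands in for one inference
    call of the engine installed on the target.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Warm-up calls are not timed: first calls often pay one-off costs
    # such as memory allocation or lazy initialization.
    for _ in range(warmup):
        infer(sample)
    timings_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    timings_ms.sort()
    return {
        "mean_ms": statistics.fmean(timings_ms),
        "median_ms": statistics.median(timings_ms),
        "min_ms": timings_ms[0],
        "max_ms": timings_ms[-1],
    }
```

With ONNX Runtime, for instance, `infer` could wrap `session.run(None, {input_name: x})` on an `onnxruntime.InferenceSession`; the metrics VLab actually reports may differ from this set.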

To execute the scripts that run the VLab benchmarking steps (described below), each target is connected to a host machine, on which the scripts are executed.

For targets that cannot run benchmarking tools natively, such as MCUs, the host gathers the metrics from the target and generates the benchmark report. See the example provided for MCUs.
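For an MCU, the firmware typically prints its measurements over a serial link and the host turns them into a report. The following sketch assumes a hypothetical `METRIC <name> <value>` line format and a JSON report; the actual format used by the MCU example may differ. It takes the lines as an iterable, so they could come from a serial-port reader or a saved log file.

```python
import json
from typing import Iterable


def parse_mcu_metrics(lines: Iterable[str], prefix: str = "METRIC") -> dict[str, float]:
    """Extract `METRIC <name> <value>` lines printed by the target firmware.

    The line format is a hypothetical convention for this sketch.
    """
    metrics: dict[str, float] = {}
    for raw in lines:
        parts = raw.strip().split()
        # Ignore ordinary log output; keep only well-formed metric lines.
        if len(parts) == 3 and parts[0] == prefix:
            try:
                metrics[parts[1]] = float(parts[2])
            except ValueError:
                continue  # value is not a number, skip the line
    return metrics


def build_report(target: str, metrics: dict[str, float]) -> str:
    """Serialize the metrics gathered on the host into a JSON report."""
    return json.dumps({"target": target, "metrics": metrics}, indent=2, sort_keys=True)
```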

Target repository

The scripts for running the VLab benchmarking steps are not stored directly on the host machine; they reside in a separate GitLab repository, referred to as the “target repository”. When a benchmark is executed, the target repository is cloned onto the host machine and its scripts are executed.

Because the benchmarking process is the same for all targets, every target repository must follow the same strict folder structure.

The implementation of these scripts is target-dependent and must be customized according to the target you wish to integrate.
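Since every target repository must follow the same layout, a quick pre-flight check can report missing entries after cloning. The file names in `REQUIRED_PATHS` are hypothetical placeholders; the real required layout is the one prescribed by the dAIEdge-VLab documentation.

```python
from pathlib import Path
from typing import Iterable, Union

# Hypothetical layout: placeholders only, not the real VLab folder structure.
REQUIRED_PATHS = (
    "config.sh",
    "build.sh",
    "deploy.sh",
    "run.sh",
    "post_process.sh",
)


def missing_paths(
    repo_root: Union[str, Path],
    required: Iterable[str] = REQUIRED_PATHS,
) -> list[str]:
    """Return the required paths that are absent from a cloned target repository."""
    root = Path(repo_root)
    return [rel for rel in required if not (root / rel).exists()]
```

Checking the structure up front lets a benchmark fail early with a clear message instead of failing midway through the pipeline.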

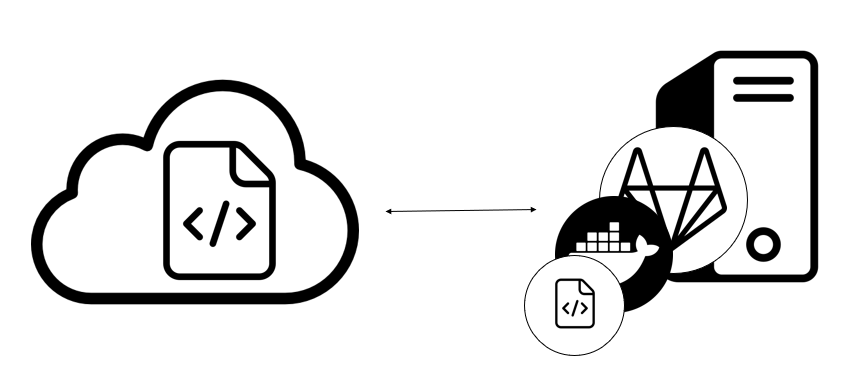

dAIEdge-VLab CI/CD pipeline

At the mid-level of the architecture, launching a benchmark with a specific configuration (model, inference engine, target) triggers the dAIEdge-VLab CI/CD pipeline with the corresponding variables and tags. This benchmarking pipeline is generic: it can sequentially execute the benchmarking scripts of any target whose repository follows the target folder structure. The pipeline definition resides on the VLab server, but when a benchmark is launched, the pipeline runs on the host machine of the selected target.

Check out the dAIEdge-VLab CI/CD pipeline script for a better understanding.
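The key property of the generic pipeline is that it runs the same ordered sequence of steps for every target, passing the benchmark configuration as variables. A Python sketch of that sequencing logic follows; it is not the actual GitLab CI/CD definition, and the stage names and variable names (such as `TARGET`) are hypothetical.

```python
import os
import subprocess
from typing import Mapping, Sequence


def run_pipeline(
    stages: Sequence[tuple[str, Sequence[str]]],
    variables: Mapping[str, str],
    cwd: str = ".",
) -> list[tuple[str, int]]:
    """Run each (stage name, command) in order, stopping at the first failure.

    `variables` mimics the pipeline variables (model, inference engine,
    target) and is exported to every stage as environment variables.
    Returns the (stage name, exit code) pairs of the stages that ran.
    """
    env = {**os.environ, **variables}
    results: list[tuple[str, int]] = []
    for name, command in stages:
        completed = subprocess.run(command, cwd=cwd, env=env, check=False)
        results.append((name, completed.returncode))
        if completed.returncode != 0:
            break  # later stages depend on earlier ones, so stop here
    return results
```

Because the sequencing lives in one place and only the per-target scripts change, adding a new target does not require touching the pipeline itself.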

GitLab Runner and Docker container

GitLab Runner is used to link the target-specific host machines to the VLab server. For each target, a runner is registered on the VLab server with specific tags (hardware board, inference engine). Each registered runner must be up and running on its target's host machine.

When a benchmark is executed, the target-specific runner (selected according to its tags) is triggered. The runner executes the dAIEdge-VLab CI/CD pipeline on the host machine, inside a Docker container started from a Docker image. The pipeline then executes the scripts defined in the target repository.
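Runner selection follows GitLab's tag rule: a runner can pick up a job only if it has every tag the job requires. The sketch below models that rule with hypothetical runner names and tags, and ignores other runner settings such as whether untagged jobs are accepted. It also shows why each runner must be online: a matching runner that is offline is never selected, and GitLab leaves the job pending until an eligible runner becomes available.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Runner:
    name: str
    tags: frozenset[str]
    online: bool = True


def eligible_runners(job_tags: set[str], runners: list[Runner]) -> list[str]:
    """Return the names of online runners that carry every tag of the job.

    Models GitLab's rule that a job's tags must be a subset of a runner's
    tags; all other runner options are ignored in this sketch.
    """
    return [r.name for r in runners if r.online and job_tags <= r.tags]
```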

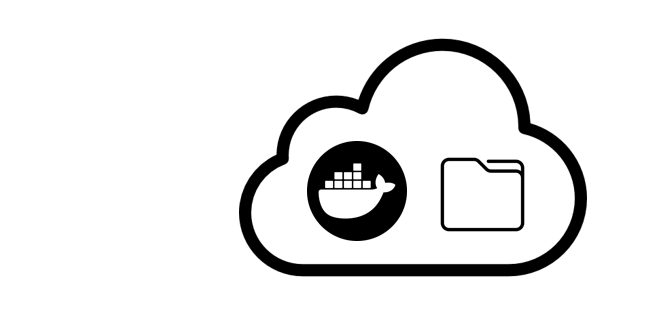

Docker Image

Finally, this Docker image contains the credentials needed to clone the target repository and all the environment dependencies needed to run the scripts. It is stored in the target repository, from which the runner pulls it. The image can be built either manually or through the CI/CD pipeline of the target repository.

Benchmark lifecycle

The following overview describes how the elements of the architecture interact during the benchmarking process.

- An end-user launches a benchmark from the web interface, providing a pre-trained model, an inference engine, and a target.

- The VLab server triggers the dedicated runner (with the corresponding target and inference engine tags) running on a host machine.

- The runner pulls the Docker image from the target repository and uses it to start a Docker container.

- Within the Docker container, the runner executes the benchmarking CI/CD pipeline provided by the VLab server.

- The benchmarking CI/CD pipeline clones the target repository and runs the benchmarking scripts using the variables stored in the Docker container.

- The benchmarking scripts deploy the model to the target device, benchmark it, post-process the collected data, and generate the appropriate artifacts, which are stored in the VLab.

- The user accesses the benchmark results through the web interface.
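The lifecycle above is strictly sequential: each step can start only once the previous one has finished. As an illustrative sketch (the stage names are hypothetical and some of the listed steps are merged), a tracker that records a benchmark's progress and rejects out-of-order transitions could look like this:

```python
from enum import Enum, auto


class Stage(Enum):
    """Benchmark lifecycle steps in order (hypothetical names)."""
    LAUNCHED = auto()           # user submits model, engine, target
    RUNNER_TRIGGERED = auto()   # VLab server triggers the tagged runner
    CONTAINER_STARTED = auto()  # runner pulls the image, starts a container
    PIPELINE_RUNNING = auto()   # pipeline clones the repo and runs scripts
    ARTIFACTS_STORED = auto()   # artifacts are stored in the VLab
    RESULTS_AVAILABLE = auto()  # user views results in the web interface


ORDER = list(Stage)  # members in definition order


class BenchmarkTracker:
    """Track one benchmark and reject out-of-order transitions."""

    def __init__(self) -> None:
        self.stage = Stage.LAUNCHED
        self.history = [Stage.LAUNCHED]

    def advance(self, nxt: Stage) -> None:
        idx = ORDER.index(self.stage)
        expected = ORDER[idx + 1] if idx + 1 < len(ORDER) else None
        if nxt is not expected:
            raise ValueError(f"cannot go from {self.stage.name} to {nxt.name}")
        self.stage = nxt
        self.history.append(nxt)

    @property
    def done(self) -> bool:
        return self.stage is Stage.RESULTS_AVAILABLE
```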