On Device Training

This section shows you a simple application to test on device training capabilities of edge devices using the dAIEdge-VLab Python API.

What does this application do ?

In this application example, we will prepare a model with Tensorflow, prepare a train dataset, prepare a validation dataset and train the model on a remote device. Finally, we will analyze the results given by the remote device.

The following diagram shows the pipeline of this application :

sequenceDiagram

participant User

participant dAIEdge-VLab

participant Target

User->>User: Prepare Datasets

User->>User: Prepare Model for training

User->>dAIEdge-VLab: Model, Datasets, Config, Target, Runtime

dAIEdge-VLab->>Target: Model, Datasets, Config

Target->>Target: Perfom the training on device

Target->>dAIEdge-VLab: report, logs, trained model

dAIEdge-VLab->>User: report, logs, trained model

User->>User: Print loss evolution

User->>User: Test trained model

User->>User: Compute accuracy metrics

Main actions :

- Prepare the datasets on the user end

- Pre-train the model on the user end (optional)

- Setup the model for on device training

- Send the model and datasets to the dAIEdge-VLab

- Train the model on the remote device

- Retrive the trained model from the remote device

- Analyze the resutls

What problem will be solved ?

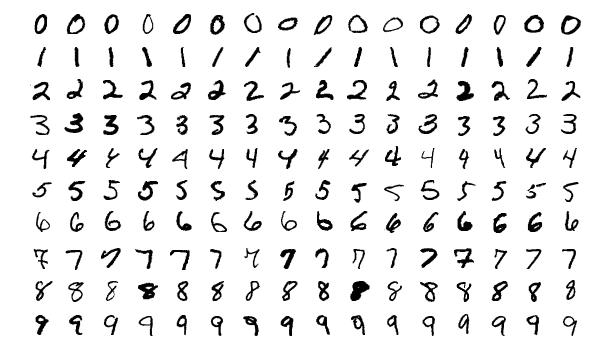

In this simple application, we will train a model to recogize numbers from an image. We will use the MNIST dataset as based for the training and testing of the model. The image bellow illustrates the kind of images that compose the dataset. Our model will simply take an image as input and output a prediction of the number that was on the image.

The output of the model will be one hot encoded. This means that we will have one output node per class we want to detect. In this case this is 10 classes as they are 10 numbers from 0 to 9. Knowing the input and ouput shape is important for the preparation of the dataset we will provide to the dAIEdge-VLab.

Available target and framework

On-device training is currently supported on the Raspberry Pi 5 platform ODT provides support for two runtime environments: TFLite and ORT. Each runtime follows a different set of preparation steps. Code examples are available for both the TFLite and ORT approaches.